Disruptions pose a major risk to burning plasma tokamaks. Two possible solutions are: (1) design scenarios and trajectories that rapidly de-energize the plasma without disrupting it, and (2) develop active control strategies that avoid disruptions in real time. The key goals of this project are to develop useful scenario-design tools for SPARC and to demonstrate the possibilities afforded by active disruption avoidance on existing machines, such as TCV, hosted by EPFL-SPC in Lausanne, Switzerland.

Fortunately, optimal control and reinforcement learning offer many tools for pursuing both of these solutions. In plasma control, however, applying these tools is extremely challenging because good dynamics models are scarce, let alone dynamics models that satisfy the properties that optimal control and reinforcement learning require.

To address this challenge, prior works have developed purely data-driven dynamics models using standard sequence-to-sequence architectures such as recurrent neural networks (RNNs) and residual networks (ResNets). However, the performance of these models is limited by the relative paucity of data. For devices like SPARC, we need to get good solutions from a small number of shots.

What can we do about this? In magnitude, this problem is uniquely challenging. However, in spirit, this isn’t a new challenge; other control systems have had to contend with similar problems. Some strategies that have worked include:

- Build abstraction layers (e.g. develop low level controllers we trust, and have the smarter controllers send commands to lower level controllers);

- System identification (i.e. learn structured dynamics models from data);

- Design trajectories and controllers that will succeed despite physics uncertainty.

In this project, the application of all of these strategies is explored using new tools such as neural differential equations and differentiable simulation.
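As a rough illustration of the system identification strategy, the sketch below fits a structured model to a single short trajectory. Everything in it is an assumption made for illustration: the scalar toy model dx/dt = a*x + b*u, the function names `simulate` and `fit_linear_dynamics`, and the numbers are hypothetical and are not taken from the project. It also uses plain least squares rather than neural differential equations. The point is only that a model with a fixed physical structure has few free parameters, so it can be identified from very little data.

```python
def simulate(a, b, x0, inputs, dt):
    """Forward-Euler rollout of the toy model dx/dt = a*x + b*u."""
    xs = [x0]
    for u in inputs:
        x = xs[-1]
        xs.append(x + dt * (a * x + b * u))
    return xs


def fit_linear_dynamics(xs, inputs, dt):
    """Least-squares estimate of (a, b) from one short trajectory."""
    # Regression targets: finite-difference estimates of dx/dt.
    ys = [(xs[k + 1] - xs[k]) / dt for k in range(len(inputs))]
    # Normal equations for y = a*x + b*u, a 2x2 linear system.
    # zip() stops at the shorter sequence, so x[k] is paired with u[k].
    sxx = sum(x * x for x in xs[:-1])
    sxu = sum(x * u for x, u in zip(xs, inputs))
    suu = sum(u * u for u in inputs)
    sxy = sum(x * y for x, y in zip(xs, ys))
    suy = sum(u * y for u, y in zip(inputs, ys))
    det = sxx * suu - sxu * sxu
    if abs(det) < 1e-12:
        raise ValueError("inputs do not excite the system enough to identify it")
    a_hat = (sxy * suu - suy * sxu) / det
    b_hat = (suy * sxx - sxy * sxu) / det
    return a_hat, b_hat


# Hypothetical "shot": 20 samples of a step-down input (made-up numbers).
A_TRUE, B_TRUE, DT = -2.0, 1.5, 0.01
inputs = [1.0 if k < 10 else 0.0 for k in range(20)]
states = simulate(A_TRUE, B_TRUE, 1.0, inputs, DT)
a_hat, b_hat = fit_linear_dynamics(states, inputs, DT)
```

Because the synthetic data here is noise-free and generated by the same model class, the fit recovers the parameters almost exactly; with real measurements, noise and model mismatch would need to be handled explicitly.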

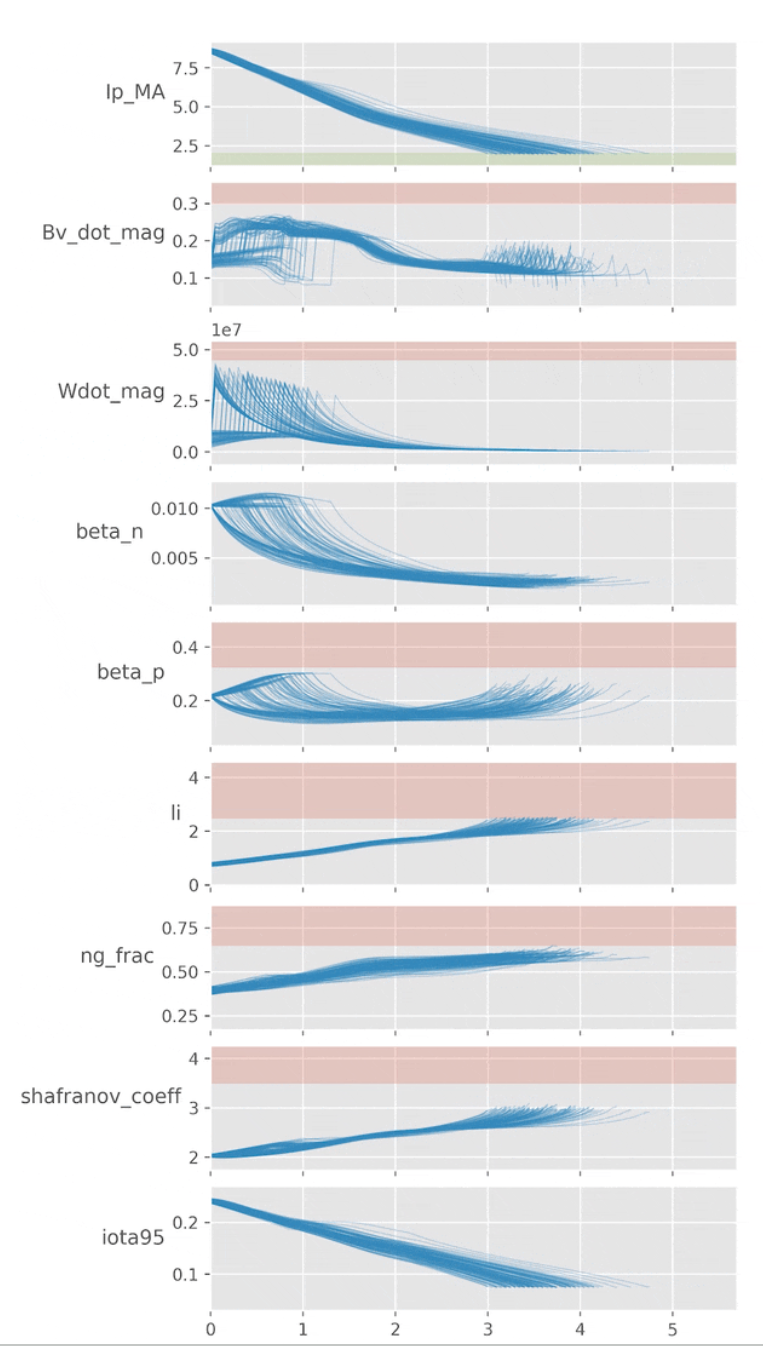

The plot shows a reinforcement learning (RL) controller trying to avoid the user-specified red “danger zones” while reaching the “green zone” on multiple simulators with different physics at the same time. The simulators themselves are hybrid physics-ML models. The resulting RL controller can serve as an assistant for designing scenarios to program into a machine, and may also be useful for real-time disruption avoidance.
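The idea of requiring success on several simulators at once can be sketched with a much simpler stand-in. The example below replaces the RL controller with a brute-force search over a single ramp-down rate, and replaces the hybrid physics-ML simulators with a made-up two-state toy model. The model, the "instability proxy", the limits, and all names (`rollout`, `is_acceptable`, `fastest_robust_rate`) are hypothetical and do not represent real plasma physics. A candidate is accepted only if it stays out of the "danger zone" and reaches the target on every model in the ensemble.

```python
def rollout(rate, tau, gain, dt=0.01, t_end=5.0):
    """Simulate a toy ramp-down; return (final_current, peak_proxy)."""
    current, proxy, peak = 1.0, 0.0, 0.0
    for _ in range(int(round(t_end / dt))):
        # The proxy is driven only while the current is still ramping down.
        drive = gain * rate if current > 0.0 else 0.0
        proxy += dt * (-proxy / tau + drive)
        current = max(current - rate * dt, 0.0)
        peak = max(peak, proxy)
    return current, peak


def is_acceptable(rate, model, proxy_limit=0.4, target_current=0.1):
    """True if the rollout avoids the danger zone and ends in the target zone."""
    tau, gain = model
    final_current, peak = rollout(rate, tau, gain)
    return peak < proxy_limit and final_current <= target_current


def fastest_robust_rate(candidates, ensemble):
    """Fastest ramp rate that is acceptable on every model in the ensemble."""
    ok = [r for r in candidates if all(is_acceptable(r, m) for m in ensemble)]
    return max(ok) if ok else None


# Hypothetical ensemble of (tau, gain) pairs standing in for different physics.
ENSEMBLE = [(0.5, 1.0), (0.8, 1.2), (0.3, 0.9)]
CANDIDATES = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6]

robust_rate = fastest_robust_rate(CANDIDATES, ENSEMBLE)
# Designing against a single model only, for comparison.
optimistic_rate = fastest_robust_rate(CANDIDATES, [ENSEMBLE[2]])
```

With these made-up numbers, the rate chosen against the full ensemble is slower than the one chosen against a single model, which is the trade-off robust design accepts in exchange for tolerance to physics uncertainty.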

Solutions are being explored both for active closed-loop deployment on existing machines, such as TCV, and for designing simulation-based scenario libraries for SPARC.

The trajectory optimization team is led by graduate student Allen Wang (MIT AeroAstro), under the supervision of Dr. Cristina Rea and Prof. Chuchu Fan (MIT AeroAstro). Collaborators include Dan Boyer, Devon Battaglia, and Ryan Sweeney (CFS), Federico Felici (DeepMind), Alessandro Pau (EPFL-SPC), and colleagues from the MIT REALM Lab.