Disruptions are difficult to model with physical simulations, due in part to the heterogeneity of causes that can lead to instability, the multi-scale nature of plasma dynamics, and a wealth of unobserved factors that affect known physical dynamics. As a result, there is an emerging literature on disruption prediction methods that go beyond “first-principles models” and rely instead on advanced statistical modeling and machine learning (ML).

Prior work has investigated random forest models, convolutional LSTMs such as the Hybrid Deep Learner (HDL), and other neural methods. However, none of these models has the accuracy desired for commercial-scale tokamaks, where disruption prediction must be nearly flawless (>95% true positive rate). Additionally, it is unclear how long the “memory” of the plasma is. Some argue that as little as 200 milliseconds of history is enough to capture the evolution of the plasma temperature and density; others argue that certain instabilities or tokamak control errors occurring near the start of a seconds-long shot may affect how the shot ends.

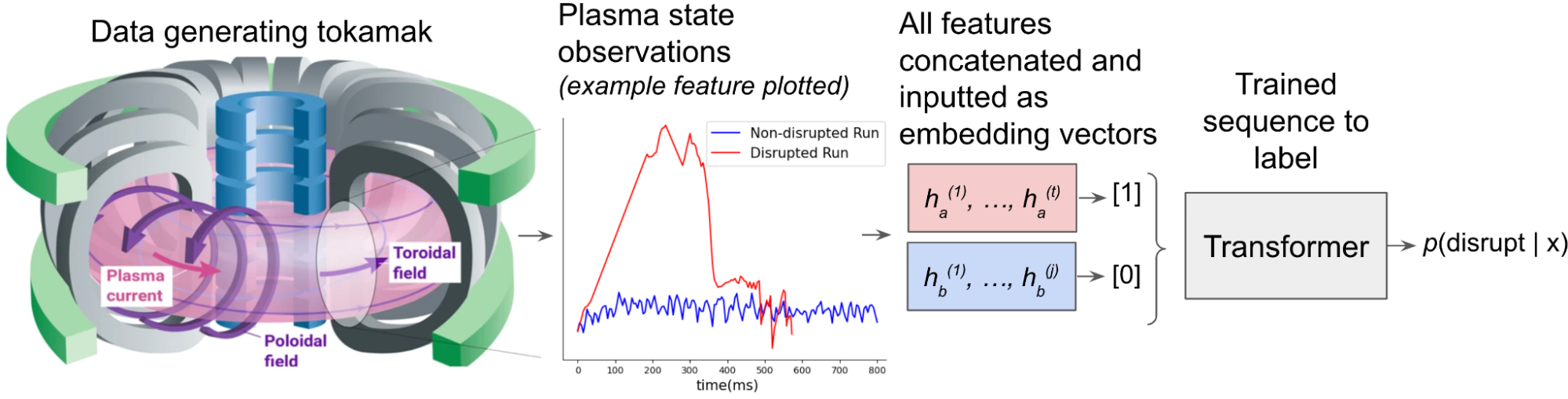

This project focuses on the development of two new ML models for disruption prediction: (1) an autoregressive GPT-2-like transformer model and (2) a continuous convolutional neural network (CCNN) model. The autoregressive transformer is augmented by pretraining on a next-state prediction task and by a curriculum of data augmentations; this approach holds the promise of explicitly taking into account the long-term nature of the plasma. The continuous CNN, on the other hand, is a variation of a CNN that infers an underlying continuous function composed of multiplicative anisotropic Gabor basis functions. The CCNN may be a promising algorithm because it is sample-rate independent and can correctly infer an underlying physical process. The development of these models leverages currently available multi-machine datasets from three tokamaks: Alcator C-Mod, DIII-D, and EAST.
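To make the sample-rate independence of a continuous kernel concrete, the following is a minimal NumPy sketch, not the project's CCNN. It assumes a 1D kernel written as a plain weighted sum of Gabor functions (a Gaussian envelope times a sinusoidal carrier). It omits the multiplicative layer stacking and the anisotropy, which only matters in two or more dimensions. All names (`gabor_kernel`, `continuous_conv`, `run`) and all parameter values are hypothetical and chosen for illustration only. The kernel is evaluated at whatever time step the signal uses and scaled by that step, so the discrete sum approximates the same continuous integral at any sample rate.

```python
import numpy as np

# Hypothetical Gabor parameters (illustrative values, not fitted to any data).
WEIGHTS = np.array([1.0, 0.5])              # mixing weights of the two basis functions
GAMMA = np.array([30.0, 15.0])              # inverse envelope widths, 1/s
MU = np.array([0.05, 0.10])                 # envelope centers, s
OMEGA = 2 * np.pi * np.array([5.0, 12.0])   # carrier frequencies, rad/s
PHI = np.array([0.0, np.pi / 4])            # carrier phases, rad


def gabor_kernel(s):
    """Evaluate the continuous kernel at lags s (seconds)."""
    s = np.asarray(s, dtype=float)[:, None]             # shape (n_lags, 1)
    envelope = np.exp(-0.5 * (GAMMA * (s - MU)) ** 2)   # shape (n_lags, n_basis)
    carrier = np.sin(OMEGA * s + PHI)
    return (envelope * carrier) @ WEIGHTS               # shape (n_lags,)


def continuous_conv(signal, dt, kernel_fn, support=0.2):
    """Causal convolution that samples the kernel at the signal's own step dt."""
    n = int(round(support / dt))
    lags = np.arange(n + 1) * dt           # kernel sample times in [0, support]
    taps = kernel_fn(lags) * dt            # Riemann-sum weights for the integral
    return np.convolve(signal, taps, mode="full")[: len(signal)]


def run(rate_hz, duration=1.0):
    """Filter a 5 Hz test sine sampled at rate_hz with the same continuous kernel."""
    dt = 1.0 / rate_hz
    t = np.arange(int(round(duration * rate_hz))) * dt
    return continuous_conv(np.sin(2 * np.pi * 5.0 * t), dt, gabor_kernel)


y_lo = run(1000.0)   # 1 kHz sampling
y_hi = run(2000.0)   # 2 kHz sampling
# Every second 2 kHz sample falls at the same physical time as a 1 kHz sample.
max_gap = np.max(np.abs(y_lo - y_hi[::2]))
print(f"largest disagreement between sample rates: {max_gap:.2e}")
```

In this sketch the two outputs differ only by the discretization error of the Riemann sum. A fixed-tap discrete kernel, by contrast, would cover a different time span at each sample rate.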

Thanks to a successful partnership with Eni and their data science team, a benchmarking platform against which ML models can be tested is under development; it defines statistically robust performance metrics for model comparison and validation. Preliminary investigations show that the CCNN model outperforms baselines when trained and tested on single-tokamak data, while the transformer model does so when trained and tested across different tokamaks.
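As an illustration of what a shot-level metric with an uncertainty estimate could look like, here is a minimal sketch that computes the true positive rate (TPR) and false positive rate (FPR) and attaches a percentile bootstrap interval to the TPR. It is an assumption about one reasonable approach, not a description of the benchmarking platform's actual metrics. The function names and the toy labels are hypothetical.

```python
import numpy as np


def tpr_fpr(y_true, y_pred):
    """Shot-level true and false positive rates (1 = disruptive shot)."""
    y_true = np.asarray(y_true, dtype=bool)
    y_pred = np.asarray(y_pred, dtype=bool)
    tp = np.sum(y_true & y_pred)
    fn = np.sum(y_true & ~y_pred)
    fp = np.sum(~y_true & y_pred)
    tn = np.sum(~y_true & ~y_pred)
    tpr = tp / (tp + fn) if (tp + fn) else float("nan")
    fpr = fp / (fp + tn) if (fp + tn) else float("nan")
    return tpr, fpr


def bootstrap_tpr_interval(y_true, y_pred, n_boot=2000, alpha=0.05, seed=0):
    """Percentile bootstrap interval for the TPR, resampling whole shots."""
    rng = np.random.default_rng(seed)
    y_true = np.asarray(y_true, dtype=bool)
    y_pred = np.asarray(y_pred, dtype=bool)
    n = len(y_true)
    stats = []
    for _ in range(n_boot):
        idx = rng.integers(0, n, size=n)   # resample shots with replacement
        stats.append(tpr_fpr(y_true[idx], y_pred[idx])[0])
    # nanquantile skips resamples that happened to contain no disruptive shot
    lo, hi = np.nanquantile(stats, [alpha / 2, 1 - alpha / 2])
    return lo, hi


# Toy labels for eight shots (made up for this example).
labels = [1, 1, 1, 1, 0, 0, 0, 0]
alarms = [1, 1, 1, 0, 1, 0, 0, 0]
tpr, fpr = tpr_fpr(labels, alarms)
lo, hi = bootstrap_tpr_interval(labels, alarms)
print(f"TPR = {tpr:.2f} (95% interval {lo:.2f} to {hi:.2f}), FPR = {fpr:.2f}")
```

With so few shots the interval is very wide, which is why a target such as a TPR above 95% can only be checked meaningfully on a large test set.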

The ML model benchmarking team is currently led by postdoctoral associate Lucas Spangher, under Dr. Cristina Rea’s supervision and in collaboration with Jinxiang Zhu (MIT). Collaborators include Will Arnold (KAIST), Francesco Cannarile, and Matteo Bonotto (Eni).