Disruption Research publication in Journal of Fusion Energy

Risk-Aware Framework Development for Disruption Prediction

Disruptions pose a considerable threat to the operation of future high-performance tokamaks. The plasma control system (PCS) must be able to both anticipate an oncoming disruption and act on it in real time. However, the PCS has multiple actions available, each with its own actuation time and associated risks. Providing the PCS with an estimate of the time remaining until a disruption will allow it to make optimal decisions to minimize damage to the device. These time-to-event estimates can be made using a field of statistics called survival analysis.

While survival analysis has been applied to disruption prediction in the past, there had been no in-depth study comparing its performance against the machine learning methods in use today. In our recent publication in the Journal of Fusion Energy, titled “Risk-Aware Framework Development for Disruption Prediction: Alcator C-Mod and DIII-D Survival Analysis” and led by graduate student Zander Keith, we evaluated the performance of several types of models on two tasks: disruption prediction (will the plasma disrupt, yes or no?) and time-to-event estimation (how long until the plasma disrupts?).

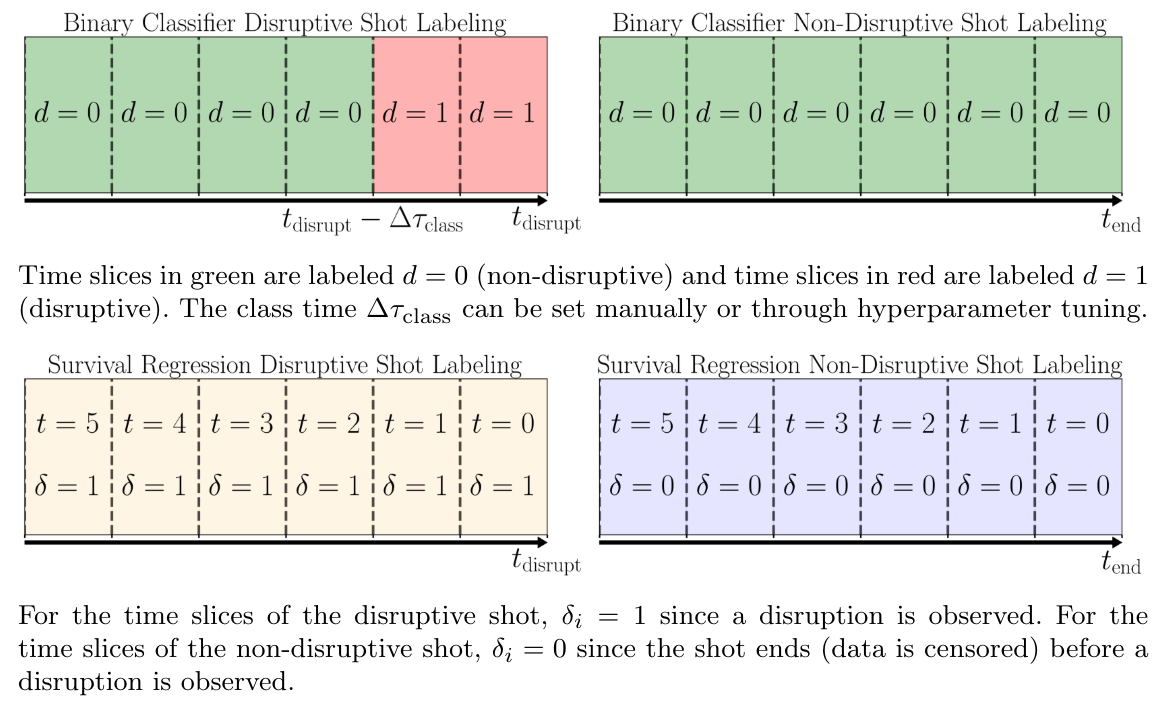

Disruption prediction with machine learning is typically done using binary classification, where most data is labeled as non-disruptive and data right before a disruption is labeled as disruptive. While one can use a binary classifier to make time-to-event estimations in the survival analysis framework, doing so requires many assumptions about how risk evolves over time. Alternatively, survival regression models use a different type of labeling: each sample records whether or not an event was observed and the time until the event takes place. This is a more natural description of time series data and can be easily applied to both disruption prediction and time-to-event estimation using survival analysis.
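To make the difference between the two labeling schemes concrete, here is a minimal sketch in Python. The function names, the millisecond time base, and the fixed labeling window `window_ms` are assumptions chosen for illustration; they are not taken from the publication, which may label data differently.

```python
from typing import List, Tuple


def binary_labels(times_ms: List[int], disrupted: bool, window_ms: int) -> List[int]:
    """Binary-classification labels for one shot.

    Samples within `window_ms` of the end of a disruptive shot are labeled 1
    (disruptive); every other sample is labeled 0 (non-disruptive).
    The window length is an illustrative assumption.
    """
    t_end = times_ms[-1]
    if not disrupted:
        return [0] * len(times_ms)
    return [1 if t_end - t <= window_ms else 0 for t in times_ms]


def survival_labels(times_ms: List[int], disrupted: bool) -> List[Tuple[bool, int]]:
    """Survival-regression labels for one shot.

    Each sample gets (event_observed, time_to_end). For a disruptive shot the
    time is the time until the disruption. For a non-disruptive shot no event
    was observed, so the time to the end of the shot is a censored duration.
    """
    t_end = times_ms[-1]
    return [(disrupted, t_end - t) for t in times_ms]


# Hypothetical shot sampled every 100 ms.
times_ms = [0, 100, 200, 300, 400]
print(binary_labels(times_ms, disrupted=True, window_ms=150))
print(survival_labels(times_ms, disrupted=True))
print(survival_labels(times_ms, disrupted=False))
```

Note that the binary labels depend on the chosen window, while the survival labels keep the full time-to-event information for every sample.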

Using data from the Alcator C-Mod and DIII-D tokamaks, we benchmarked the performance of several types of models as both disruption predictors and time-to-event estimators. For prediction, the comparison used the widely reported area under the receiver operating characteristic curve (AUROC) metric, as well as metrics that take typical warning time into account. For time-to-event estimation, we looked at the accuracy of the model output compared to an ideal value at the last time point of the shot.
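As a rough illustration of the two kinds of prediction metrics mentioned above, the sketch below computes AUROC from per-shot scores and a warning time for a single disruptive shot. It assumes each shot has already been reduced to one score and that an alarm is raised at the first threshold crossing; both are simplifying assumptions, and the publication's exact metric definitions may differ.

```python
from typing import List, Optional


def auroc(scores_pos: List[float], scores_neg: List[float]) -> float:
    """AUROC as the probability that a randomly chosen disruptive shot scores
    higher than a randomly chosen non-disruptive shot (ties count as half)."""
    wins = 0.0
    for p in scores_pos:
        for n in scores_neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(scores_pos) * len(scores_neg))


def warning_time(times_ms: List[int], risk: List[float],
                 threshold: float, t_disrupt_ms: int) -> Optional[int]:
    """Time between the first sample whose risk reaches `threshold` and the
    disruption. Returns None if the alarm is never raised."""
    for t, r in zip(times_ms, risk):
        if r >= threshold:
            return t_disrupt_ms - t
    return None


# Hypothetical per-shot scores and one hypothetical risk trace.
print(auroc([0.9, 0.8, 0.4], [0.1, 0.5]))
print(warning_time([0, 100, 200, 300, 400], [0.1, 0.2, 0.6, 0.9, 0.95], 0.5, 400))
```

Two models can have the same AUROC while raising alarms at very different times, which is why warning time is worth reporting alongside it.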

There are three key findings from this work:

- Survival regression models can achieve longer warning times than binary classifiers at similar AUROC performance.

- Survival regression models have improved accuracy in estimating time-to-event than previously studied models.

- The metric chosen for hyperparameter tuning is a very important part of the design process.

In this study, we benchmarked the performance of models when trained and tested on an existing device. This approach requires a large amount of experimental data for the device the model is deployed on. Future work will investigate developing survival analysis models for the early SPARC campaign, where there will be minimal experimental data. This may involve using data from many existing devices combined with simulation of SPARC scenarios.

Another area of future work is developing better metrics for hyperparameter tuning and model selection. While AUROC is widely reported, it includes performance in regions with a high false positive rate, which is completely irrelevant to SPARC operation. In the future, we plan to collaborate with the SPARC controls team to develop metrics that prioritize the performance relevant to tokamak operation.
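One simple example of a metric that ignores the high false positive region is the true positive rate achievable under a cap on the false positive rate. The sketch below is only an illustration of that idea; the function name and the `max_fpr` parameter are hypothetical, and this is not a metric proposed in the publication or by the SPARC controls team.

```python
from typing import List


def tpr_at_max_fpr(scores_pos: List[float], scores_neg: List[float],
                   max_fpr: float) -> float:
    """Best true positive rate over all alarm thresholds whose false positive
    rate does not exceed `max_fpr`. A shot raises an alarm if score >= threshold."""
    best = 0.0
    # Only thresholds equal to an observed score can change the outcome.
    for thr in set(scores_pos) | set(scores_neg):
        fpr = sum(s >= thr for s in scores_neg) / len(scores_neg)
        if fpr <= max_fpr:
            tpr = sum(s >= thr for s in scores_pos) / len(scores_pos)
            best = max(best, tpr)
    return best


# Hypothetical per-shot scores for disruptive and non-disruptive shots.
pos = [0.9, 0.8, 0.4, 0.3]
neg = [0.1, 0.2, 0.5, 0.85]
print(tpr_at_max_fpr(pos, neg, max_fpr=0.25))
```

Unlike AUROC, this value changes only when the model improves in the low false alarm regime set by `max_fpr`.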
